I'm a Roboticist and Applied Scientist at the Boston Dynamics Robotics & AI Institute, where I focus on developing advanced robot hands, tactile sensors, and manipulation algorithms. Previously, I was a graduate student at Carnegie Mellon's RoboTouch Lab, under the guidance of Dr. Wenzhen Yuan. My research there centered on using tactile sensing to enable robots to perform complex industrial assembly tasks, such as handling flexible components like cables and inserting connectors.

Prior to CMU, I was at MIT, where I was advised by Dr. Edward Adelson in the Perceptual Science Group at CSAIL. My work at MIT focused on developing GelSight sensorized robot hands. I've also done research at the Robert Bosch Centre for Cyber-Physical Systems (RBCCPS) at the Indian Institute of Science (IISc), Bangalore, under the mentorship of Dr. Bharadwaj Amrutur. There, I led the joint IISc-MIT team in the Kuka Innovation AI Challenge 2021.

I am also the co-founder of Sastra Robotics, which focusses on building robots for automated functional testing of devices. At Sastra, I led the R&D and production teams, designing hardware prototypes, developing real-time embedded systems, and writing robotics and computer vision software that continue to be commercially utilized.

My expertise includes:

- Developing controllers for robotic manipulation

- Real-time embedded systems and low-level hardware controllers

- Computer vision and machine learning

- Python/C++ application development

- ROS (Robot Operating System), DRAKE

- Industrial robot programming for UR, Kuka, and Motoman robots

News

- [March 2025] Workshop on acoustic sensing for robots at ICRA 2025: RoboAcoustics

- [Feb 2025] Work on tactile sensor design using a physically accurate light simulator published in Nature Communications Engineering

- [July 2023] I have joined the Boston Dynamics Robotics & AI Institute as a Roboticist/Applied Scientist!

- [Jan 2023] Our new paper (Cable Routing and Assembly using Tactile-driven Motion Primitives) is accepted for ICRA 2023.

- [Jan 2023] First stable release of Easy_UR, a library to control the UR series of robots

Education

Graduate Student, MechE - Robotics and Controls

Carnegie Mellon University (Sep 2021 – May 2023, Pittsburgh, US)

Courses: Machine Learning (24-787, also TA'ed), Mechanics of Manipulation (16-741), AI for Manipulation (16-740), Computer Vision (24-678), Statistical Techniques/Reinforcement Learning for Robotics (16-831), Robot Cognition for Manipulation (16-890), Optimization (18-660), Bio-Inspired Robotics (24-775).

Massachusetts Institute of Technology (Oct 2018 – Oct 2019, Cambridge, USA)

Courses: Introduction to Robotics (2.12), Robotic Manipulation (6.881)

Govt. Engineering College, Sreekrishnapuram (Calicut University) (Sep 2008 – May 2012, India)

Courses: Electronic Circuits, Control Systems, Embedded Systems, Communication Systems

Experience

Graduate Research Assistant, RoboTouch Group, Robotics Institute, CMU, Sept 2021 – Present, Pittsburgh, US

- Developed tactile-driven manipulation primitives and skills for cable routing and assembly tasks.

- Developed fabrication and calibration techniques for curved GelSight fingertip sensors aided by simulation.

- Implemented tactile (GelSight) based hybrid force-position controllers for UR5 robots.

- Developed and maintained the manipulation pipeline (including easy_ur) used for various other projects in the lab.

Robert Bosch Centre for Cyber-Physical Systems, Indian Institute of Science, Sept 2020 – Sept 2021, Bangalore, India

- Led Team MIT-IISc in the Kuka Innovation Award - AI Challenge 2021; the team was selected as one of the five finalists worldwide.

- Designed and fabricated a two-finger robotic hand featuring GelSight tactile sensing on the inner surface and a close-range proximity sensor array on the outer surfaces. The hand was capable of performing in-hand object rolling, enabling the gathering of tactile data over extended object surfaces.

- Investigated reinforcement learning algorithms for exploring and picking up objects in vision-denied environments using tactile and proximity sensing data.

- Implemented model-free non-prehensile object manipulation techniques on a Kuka IIWA robot.

- Trained graduate students in programming Kuka and Motoman robot manipulators and in GelSight fabrication.

Perceptual Science Group, CSAIL, Massachusetts Institute of Technology, Oct 2018 – Oct 2019, Cambridge, USA

- Developed a fully actuated robotic hand with multi-sensory feedback, featuring several GelSight tactile sensors. The design encompassed the mechanical system, electronics, and software for data capture and processing. The hand was capable of limited in-hand manipulation.

Sastra Robotics, Aug 2013 – Sep 2018, Cochin, India

- As the technical co-founder, designed and built the drive systems, motion controllers and user software for SCARA and 6-axis robots for device testing applications.

- Developed robot force controllers to guarantee the safety of tested devices and a sensor-equipped finger to test the haptic feedback felt on touchscreens.

- Created computer vision systems to analyze device states from LCD screens, dials, switches, knobs, etc.

- These robots reduced device testing time for OEM clients by up to 70%.

- Hired, trained, built and managed a team of engineers and technicians who were involved in R&D, product design and production.

- Designed scalable hardware and software architectures.

- Created product roadmaps that reflected market demands, working closely with customers and building in their feedback.

Asimov Robotics (Energid Technologies), June 2012 – July 2013, Cochin, India

- Developed ROS and LabVIEW interfaces for the Cyton Gamma 7-DOF manipulator through the Energid Actin framework.

- Designed electrical subsystems, motor controllers and sensor interfaces for a humanoid social robot.

- Implemented a ROS-based manipulation pipeline for a custom bi-manual dexterous manipulator.

- Reviewer: RA-L, IROS, IEEE-RAM, AIR-2019

- Program Committee Member - Advances in Robotics (AIR-2019)

- Voting Member - Bureau of Indian Standards: Automation Systems & Robotics Committee.

- Industry Member in drafting the Robotics & Automation Roadmap for India, IEEE-SA Industry Connections Workshop 2017.

- Numerous introductory talks/workshops on robotics in colleges in Kerala, India

Publications

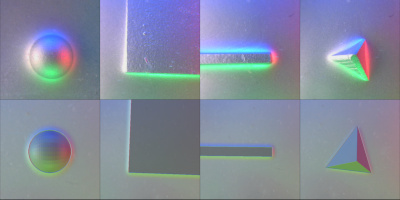

- Vision-based tactile sensor design using physically based rendering. Arpit Agarwal, Achu Wilson, Timothy Man, Edward Adelson, Ioannis Gkioulekas & Wenzhen Yuan, in Nature Communications Engineering, volume 4, Article number: 21 (2025)

- Cable Routing and Assembly using Tactile-driven Motion Primitives. Achu Wilson, Helen Jiang, Wenzhao Lian, Wenzhen Yuan - (accepted for IEEE International Conference on Robotics & Automation (ICRA), London, 2023) VIDEO

- Design of a Fully Actuated Robotic Hand With Multiple Gelsight Tactile Sensors. Achu Wilson, Shaoxiong Wang, Branden Romero, Edward Adelson, RoboTac Workshop, International Conference on Intelligent Robots and Systems (IROS), Macau, 2019. VIDEO

- GelSight Simulation for Sim2Real Learning. Daniel Fernandes Gomes, Achu Wilson, Shan Luo, ViTac Workshop, IEEE International Conference on Robotics & Automation (ICRA), Montreal, 2019.

- Design and Development of a Magneto-Rheological Linear Clutch for Force controlled Human Safe Robots. Achu Wilson, IEEE International Conference on Robotics & Automation (ICRA), Singapore, 2017. VIDEO

- Robot arm for testing of touchscreen applications. Achu Wilson, Aronin P, Akhil A, International Patent, WO2017051263A3

Projects

Non-Prehensile Pivoting for Manipulation of Heavy Objects. Inspired by the way humans move big and heavy objects, this project investigated methods for manipulating objects heavier than the robot manipulator's payload. Objects were manipulated through pivoting actions that maintain edge or line contacts with the surface. An analytical solution and a sampling-based MPC controller were developed as part of the CMU 16-741 Mechanics of Manipulation class, while reinforcement-learning-based solutions were studied as part of the CMU 16-845 RoboStats class. The video shows the robot moving the object using the analytical solution.

Cable Routing and Assembly using Tactile-driven Motion Primitives. In this project, we combined tactile-guided low-level motion techniques with high-level vision-based task parsing for routing and assembling cables on a reconfigurable task board. We developed a library of tactile-guided motion primitives using a fingertip GelSight sensor. Each primitive reliably accomplished operations such as following along a cable, wrapping it around pivots, weaving it through slots, or inserting a connector. The overall task was inferred through visual perception from a given goal configuration image, which was used to generate the primitive sequence. VIDEO

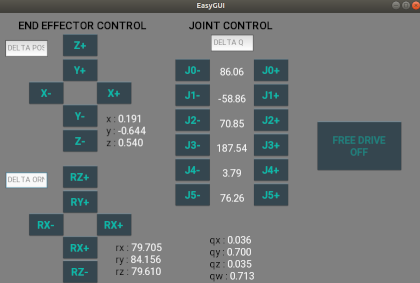

Easy_UR

easy_ur is a library that provides a straightforward way to control Universal Robots while enabling flexible control.

It offers TCP and joint level position, velocity, acceleration, servoing, and freedrive control.

An intuitive GUI, built with Kivy, is also included.

code: https://github.com/achuwilson/easy_ur

Non-Prehensile manipulation for pivoting around corners without slipping.

Inspired by the human ability to slide an object over a surface and pivot it around corners without dropping it, this project examined non-prehensile manipulation methods to achieve similar results.

In the MIT Fall 2020 6.881 Manipulation class, offered entirely online, I chose this challenge as my class project.

The robot had no model of the object or the environment. It kept a constant vertical force while sliding the box across the table. At the edge, the controller estimated the box's pose and applied horizontal and vertical forces to avoid slipping.

The controller was also able to return the box from a vertical position to a horizontal position on the table.

Gripper with in-hand rolling, tactile and proximity sensing

Designed for the Kuka Innovation Award - AI Challenge 2021, this 3-DOF gripper features two GelSight tactile sensors on the inner surface and an array of proximity sensors on the outer surface.

The fingers can be independently extended or retracted, giving the hand dexterity to perform simple in-hand rolling of objects.

Rolling lets the hand capture rich tactile data over a larger surface than is possible with a single contact.

The proximity sensors aid the robot in avoiding obstacles in low-visibility, cluttered environments.

Background Segmentation for FingerVision tactile sensor. FingerVision is an optical tactile sensor that delivers RGB images of objects within the fingers along with contact forces from optical markers. To separate objects from the background captured by the sensor, a deep learning approach was applied. The process involved collecting and labeling training data, then training a U-Net-based network. The video demonstrates the RGB input on the left and the segmented objects on the right. The training achieved high accuracy within just a few tens of minutes and demonstrated good generalization.

Quasi Direct Drive Manipulator. A personal project that provided me with lots of interesting challenges and learning opportunities. The attempt to build a backdrivable quasi direct drive robot arm from the ground up was motivated by the easy availability of high-torque BLDC motors and controllers, and by projects such as the Berkeley Blue robot arm. The 7-DOF robot has a spherical shoulder and a spherical wrist (not shown in the video), making it dexterous. The project also received partial funding from MIT ProjX 2019.

Fully Actuated Robotic Hand With Multiple GelSight Tactile Sensors. This work details the design of a novel two-finger robot gripper with multiple GelSight-based optical-tactile sensors covering the inner surface of the hand. The GelSight sensors can capture the surface topology of the object from multiple views simultaneously and can also track shear and tensile stress. In addition, other sensing modalities enable the hand to gather thermal, acoustic and vibration information from the object being grasped. The force-controlled gripper is fully actuated, so it can be used for various grasp configurations and for in-hand manipulation tasks.

GelSight Simulation for Sim2Real Learning. We introduce a novel approach for simulating a GelSight tactile sensor in the commonly used Gazebo simulator. Like the real GelSight sensor, the simulated sensor uses an optical sensor to produce high-resolution images of the interaction between the touched object and an opaque soft membrane. It can indirectly sense forces, geometry, texture and other properties of the object, and enables research on Sim2Real learning with tactile sensing.

Simulation of GelSight 3D reconstruction. This is one of the earliest works on simulating the behaviour of GelSight in a robotics simulator. The contact surface reconstruction capabilities of GelSight are simulated using raytracing techniques. An array of rays cast from a rectangular sensor patch is used to compute point cloud data for the contact surface. The video shows a robot gripper with the simulated sensor in the PyBullet simulator grasping a coin and a sphere and generating the corresponding point clouds, which are visualized in RViz.
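The ray-casting idea can be illustrated with a minimal, standalone sketch. This is not the project's code: it replaces the simulator's ray queries with an analytic ray-sphere intersection, and the function name `cast_patch_rays`, the patch dimensions, and the `max_depth` cutoff (standing in for the gel thickness) are all assumptions made for illustration.

```python
import math


def cast_patch_rays(width, height, nx, ny, sphere_center, sphere_radius, max_depth):
    """Cast an nx-by-ny grid of parallel rays along +z from a patch at z = 0.

    Returns a list of (x, y, z) hit points on the sphere. Rays that miss the
    sphere, or hit it deeper than max_depth, contribute no point.
    Requires nx >= 2 and ny >= 2.
    """
    cx, cy, cz = sphere_center
    points = []
    for i in range(nx):
        for j in range(ny):
            # Ray origin on the rectangular patch, centred on the origin.
            x = -width / 2 + width * i / (nx - 1)
            y = -height / 2 + height * j / (ny - 1)
            # The ray is (x, y, t) for t >= 0; solve |p - c|^2 = r^2 for t.
            lateral_sq = (x - cx) ** 2 + (y - cy) ** 2
            disc = sphere_radius ** 2 - lateral_sq
            if disc < 0:
                continue  # the ray passes beside the sphere
            t = cz - math.sqrt(disc)  # nearest of the two intersections
            if 0 <= t <= max_depth:
                points.append((x, y, t))
    return points


# Hypothetical numbers: a 20 mm x 20 mm patch, a 10 mm radius sphere whose
# nearest point sits 2 mm in front of the patch, and a 5 mm sensing depth.
cloud = cast_patch_rays(0.02, 0.02, 21, 21, (0.0, 0.0, 0.012), 0.01, 0.005)
print(len(cloud), "points; closest depth:", min(p[2] for p in cloud))
```

In a physics simulator the analytic intersection would be replaced by the engine's own batched ray queries, and the resulting points would be published as a point cloud message for visualization.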

Magneto-Rheological Linear Clutch. This paper introduces an MR clutch that can control the force transmitted by a linear actuator. The electromechanical model of the linear clutch was developed, implemented in hardware, and tested using a prototype one-degree-of-freedom arm. The design of the clutch is detailed and its performance is characterized through a series of experiments. The results suggest that the linear clutch serves well for precise force control of a linear actuator.

Predicting the Flexibility of Electric Cables using Robot Tactile Sensing and Push Primitives. This project, done as part of the CMU 24-787 AI & ML class, explored various ML techniques to learn and classify different cable types from tactile data. The tactile data was captured from GelSight sensors when the robot grasped a cable and executed a predefined push primitive action. The classification performance of numerous shallow machine learning approaches was compared against that of a CNN. We also applied PCA to investigate how these methods perform on lower-dimensional data.

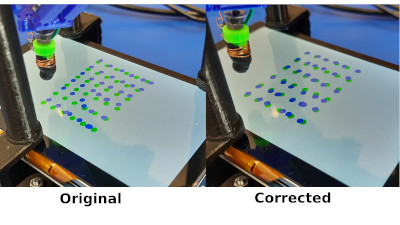

Improving the accuracy of DeltaZ robot using Machine Learning. DeltaZ is an affordable, compliant, delta-style, centimeter-scale robot developed to teach the ideas of manipulation. This project developed methods to improve the accuracy of the robot by modeling its errors with a neural network. The left part of the image shows the robot touching a touchscreen (blue dots), with green dots as the goal positions. The right part shows the robot performing with the correction applied.

Superhuman scores on the 2048 game using a Robot. This was a weekend project where I used an SR-SCARA robot, computer vision, and AI techniques to play the popular 2048 game on a smartphone. The smartphone screenshot was captured using ADB and analyzed using OpenCV, and a minimax algorithm was used to choose the next move. The robot then executed the move on the real device.
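The move-selection step can be sketched as a small depth-limited minimax search over 2048 boards. This is an illustrative sketch, not the project's implementation: the `score` heuristic is hypothetical, and the new tile is modeled as being placed by an adversary in the worst empty cell, whereas the real game places it at random.

```python
DIRECTIONS = ("left", "right", "up", "down")


def slide_row_left(row):
    """Slide one row to the left and merge equal neighbours, 2048-style."""
    tiles = [v for v in row if v]
    merged = []
    i = 0
    while i < len(tiles):
        if i + 1 < len(tiles) and tiles[i] == tiles[i + 1]:
            merged.append(tiles[i] * 2)  # each tile merges at most once
            i += 2
        else:
            merged.append(tiles[i])
            i += 1
    return merged + [0] * (len(row) - len(merged))


def move(board, direction):
    """Return a new board after sliding in the given direction."""
    if direction == "left":
        return [slide_row_left(r) for r in board]
    if direction == "right":
        return [slide_row_left(r[::-1])[::-1] for r in board]
    cols = [list(c) for c in zip(*board)]  # transpose: columns become rows
    moved = move(cols, "left" if direction == "up" else "right")
    return [list(r) for r in zip(*moved)]


def score(board):
    """Hypothetical heuristic: favour empty cells and a large top tile."""
    empty = sum(v == 0 for r in board for v in r)
    return 10 * empty + max(max(r) for r in board)


def minimax(board, depth, player_turn):
    """Depth-limited minimax; the opponent places a 2 or 4 in the worst cell."""
    if depth == 0:
        return score(board)
    if player_turn:
        options = [b for b in (move(board, d) for d in DIRECTIONS) if b != board]
        if not options:
            return score(board)  # no legal move left
        return max(minimax(b, depth - 1, False) for b in options)
    worst = None
    for r in range(len(board)):
        for c in range(len(board[r])):
            if board[r][c] == 0:
                for tile in (2, 4):
                    child = [row[:] for row in board]
                    child[r][c] = tile
                    value = minimax(child, depth - 1, True)
                    worst = value if worst is None else min(worst, value)
    return score(board) if worst is None else worst


def best_move(board, depth=3):
    """Return the direction with the best minimax value, or None if stuck."""
    best, best_val = None, None
    for d in DIRECTIONS:
        nxt = move(board, d)
        if nxt == board:
            continue  # this direction changes nothing, so it is not legal
        val = minimax(nxt, depth - 1, False)
        if best_val is None or val > best_val:
            best, best_val = d, val
    return best


board = [[2, 2, 0, 0], [0, 4, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0]]
print(best_move(board))
```

In the full pipeline, the board would come from the analyzed screenshot and the returned direction would be translated into a swipe executed by the robot.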

SR-6D. SR-6D is a lightweight manipulator developed at Sastra Robotics for small-parts picking and research purposes. I helped design the mechanics and developed the low-level electronics, control software, high-level kinematics, trajectory generation, GUI and API interfaces.

SR-SCARA. SR-SCARA was developed for high-speed functional testing of devices with HMI interfaces such as touchscreens, buttons, etc. I helped design the mechanics; developed the low-level electronics, control software, high-level kinematics, API interfaces, and computer vision systems to estimate the test device state; and spent time with customers to refine the product. These robots helped customers (including many global automotive OEMs) reduce testing time by up to 70%. I also designed a force-controlled stylus that can apply arbitrary forces and is safe to use on the device under test.

My first robot: Inspired by a talk on ROS and by watching Wall-E, I built this robot around 2010 as a way to get started with ROS. I learned how to make low-level hardware communicate with ROS and got familiar with the high-level tools in ROS. I trained voice control using CMU Sphinx and later implemented autonomous indoor SLAM navigation using the ROS navigation stack.

Notes

These are tips, tricks and documentation that are automatically updated from my Obsidian notebooks and GitHub README files.